Although the economic slowdown has weakened the demand for IT experts, which had been growing for many years, recruiting qualified employees remains a significant challenge for employers. A key task for organizations in the coming years will be to give developers opportunities for long-term career development that take into account the growing role of artificial intelligence, according to the report “What’s bugging IT” prepared by the consulting firm Deloitte. Another important issue is identifying the needs of technology experts, who increasingly expect their employers to provide support in health-related matters as well as flexibility regarding where and when they work.

The survey, conducted in the fourth quarter of 2023, examined the challenges and needs of IT department employees and their attitudes toward developments such as advancing automation. It drew 300 respondents, a substantial share of whom were from Poland. They included senior and middle managers as well as people employed as specialists.

AI is revolutionizing IT

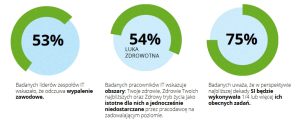

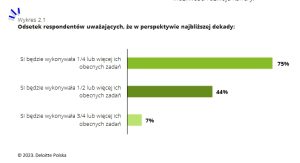

Like many other industries, the IT sector is undergoing a major change driven by the growing popularity of artificial intelligence. Asked how much of their current responsibilities will be taken over by AI, 44% of respondents indicated half or more. Asked to characterize those responsibilities, they named a very broad range of tasks, from analytics and routine business processes, through creating and running tests, to project management and customer service.

Automation will not leave the nature of IT specialists' work unaffected. According to the report's authors, this poses a challenge for employers, who should respond to the changing reality by developing tools and processes that enable experts' long-term development. The high propensity of IT professionals for self-development and learning, cited in the report, offers some hope here. Asked which areas will gain importance over the next 2-3 years, six in ten respondents pointed to security. A further 41% of responses concerned generative AI, and one in three respondents considered cloud technologies the most important.

“The growing prevalence of generative artificial intelligence will drive significant changes in how enterprises operate. For this reason, organizations should enable IT experts to develop both technological competencies and those related to behavioral science or psychology. This will make it possible to align their level of knowledge with current market needs, which can bring tangible benefits not only to the experts themselves but to entire organizations. Also relevant are the predispositions of technology specialists, thanks to which they can become leaders of the adoption of new technologies in their organizations,” says John Guziak, Partner, Human Capital, Deloitte.

Burnout is a threat to IT

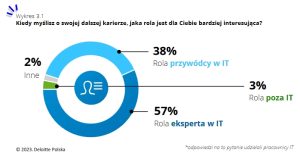

As part of the Deloitte survey, experts were asked how they feel about their current work. Occupational burnout turns out to be a growing threat to the industry: it is reportedly experienced by as many as 53% of IT team leaders and more than a quarter of the specialists surveyed. The causes vary and include a lack of work-life balance, an excess of responsibilities, and a general lack of job satisfaction. The prevalence of burnout may be one reason why so few respondents want to take on the position of IT department leader in the future. Only 38% of respondents see themselves in that role, while a majority (58%) plan to develop toward the role of technology expert. As factors that cause dissatisfaction and prompt them to consider changing profession or employer, respondents most often name an unsatisfactory level of pay (46%), a lack of development opportunities (29%), and inefficient processes in the organization (24%).

Remote work and effectiveness

Maintaining an adequate level of staffing in IT departments requires employers to identify and meet the needs of technology specialists. Whether those needs are being met is shown by the support gap cited in the survey, that is, the difference between the percentage of respondents who received support from their employer in a given area and the percentage who indicated that the area is important to them. The largest gaps appear in the areas of free time (-51 percentage points), the health of respondents' loved ones (-46 percentage points), and a healthy lifestyle (-40 percentage points). The smallest differences between expectations and actual support concern the possibility of remote work (-8 percentage points), flexible forms of employment and working hours (-6 percentage points), and the opportunity to take part personally in charitable initiatives (13 percentage points).

“The results on the support gap show both the upsides and the downsides of remote work. On the one hand, employers are aware of its importance; on the other, it has negative consequences. The growing popularity of performing professional duties remotely is sometimes associated with the spread of a less active lifestyle, which may lead to more cases of lifestyle diseases. For this reason, employers should offer support in the form of, for example, advice from a dietitian or personal trainer, or training in disease prevention. Initiatives of this kind can have a positive effect on employees' well-being and on maintaining an adequate level of staffing in IT departments,” says Zbigniew Łobocki, Senior Manager, Human Capital, Deloitte.

The prevalence of remote work in the IT sector creates risks that go beyond employee well-being. The effectiveness of technology teams working remotely is also a subject of ongoing debate. According to the report's authors, this way of working should be properly planned. Habits and fixed elements of how teams operate may prove crucial here, and they should cover not only day-to-day matters but also long-term planning, giving feedback, and building relationships.

By Marek Grzybowski
